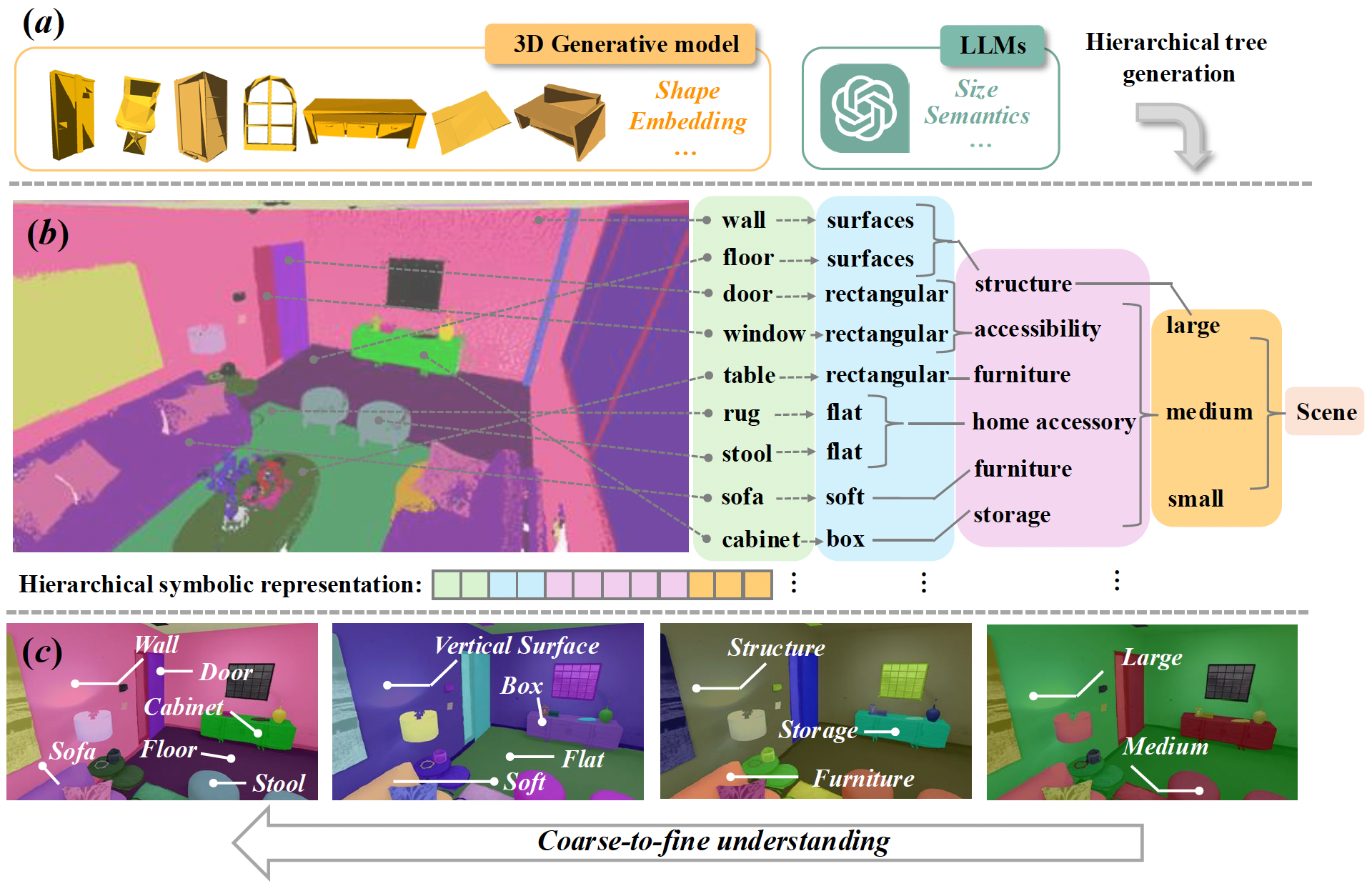

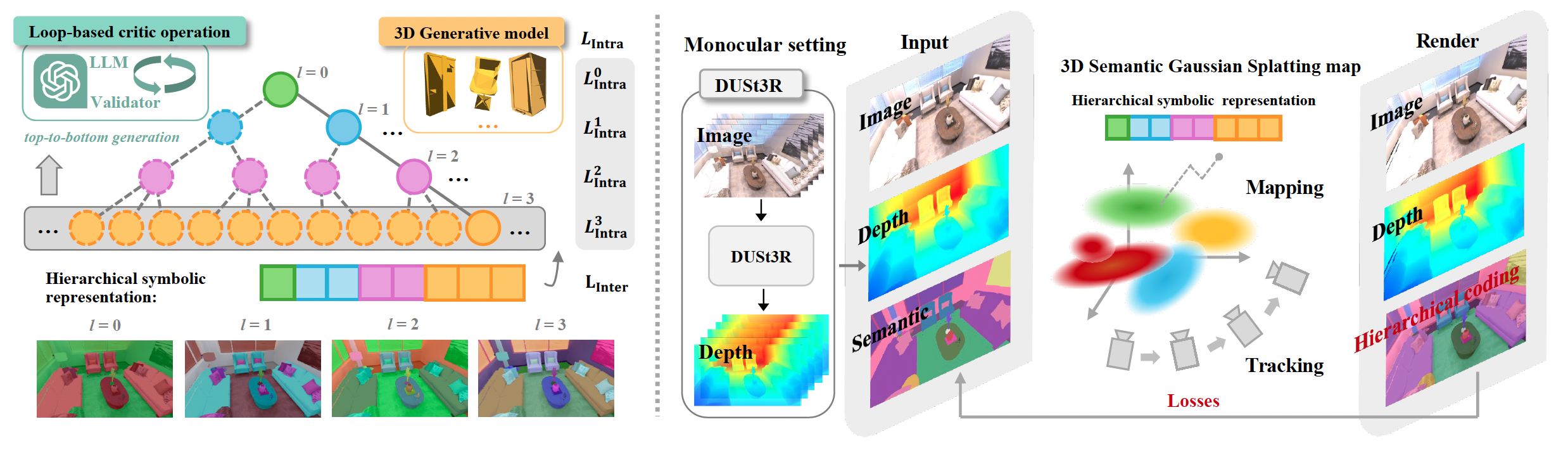

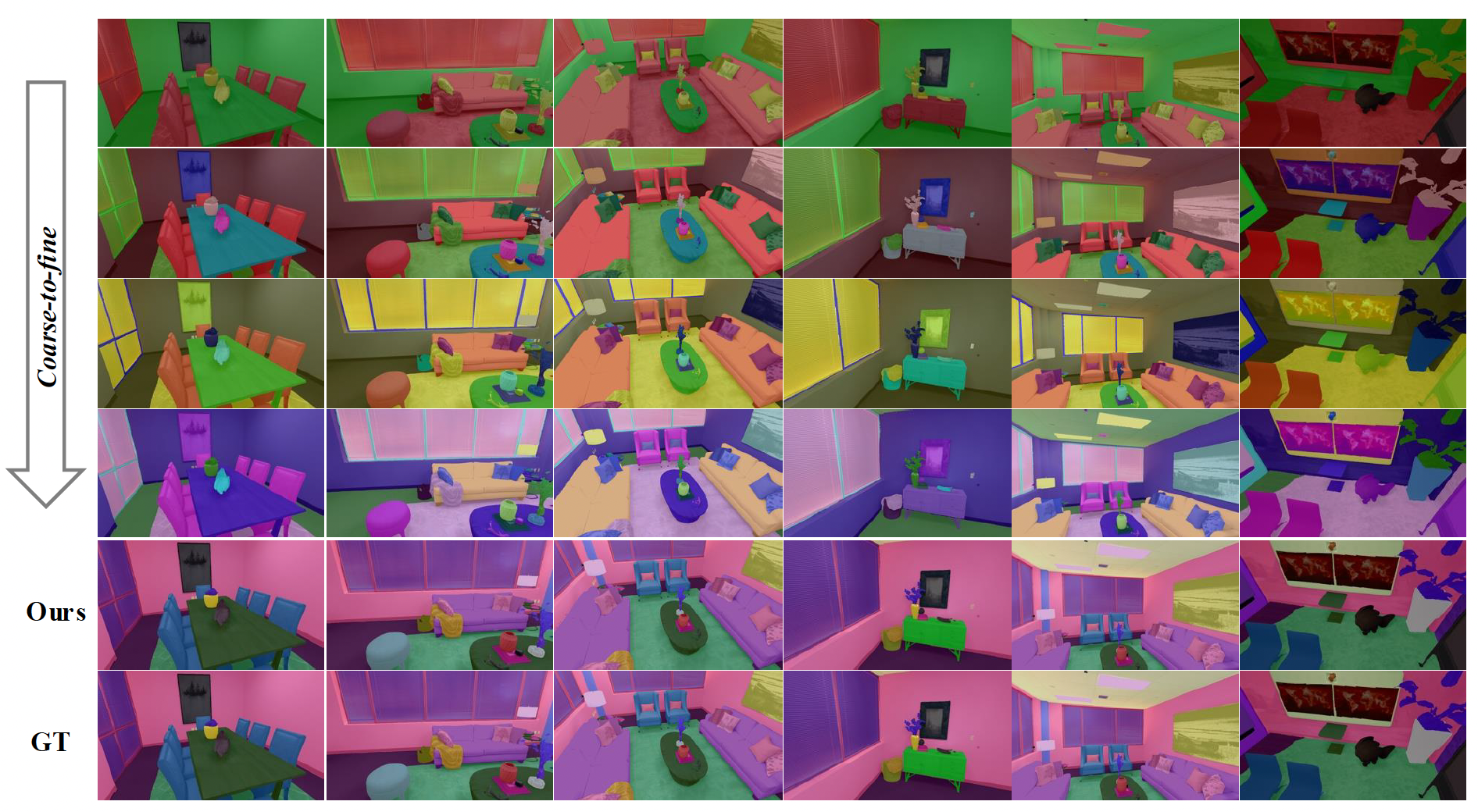

Within Hier-SLAM++, we model the semantic space as a hierarchical tree, in which semantic information is represented as a root-to-leaf symbolic structure. This representation captures the hierarchical attributes of semantic information, reduces storage requirements, and improves semantic understanding while scaling up to larger semantic spaces. To construct an effective tree, we leverage both LLMs and 3D Generative Models to integrate semantic and geometric information. Each 3D Gaussian primitive is then augmented with a hierarchical semantic embedding code, which is learned in an end-to-end manner through the proposed Inter-level and Cross-level semantic losses. Furthermore, we extend our method to support monocular input by incorporating geometric priors extracted from a feed-forward method, thereby eliminating the dependency on depth information.
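To make the root-to-leaf representation concrete, the following minimal sketch encodes each leaf class as a concatenation of per-level one-hot blocks. It is an illustration only, not the authors' implementation: the toy hierarchy `TREE` and the helpers `leaf_paths`, `level_widths`, and `encode` are hypothetical names introduced here, and the learned embedding and the Inter-level and Cross-level losses are not modeled.

```python
from typing import Dict, List, Tuple

# Hypothetical toy hierarchy (not taken from the paper).
# Nested dicts; a leaf class is a key whose value is an empty dict.
TREE: Dict[str, dict] = {
    "background": {"wall": {}, "floor": {}},
    "object": {
        "furniture": {"chair": {}, "table": {}, "sofa": {}},
        "appliance": {"tv": {}, "lamp": {}},
    },
}


def leaf_paths(tree: Dict[str, dict], prefix: Tuple[int, ...] = ()) -> Dict[str, Tuple[int, ...]]:
    """Map each leaf name to its root-to-leaf path of child indices."""
    paths: Dict[str, Tuple[int, ...]] = {}
    for idx, (name, children) in enumerate(tree.items()):
        path = prefix + (idx,)
        if children:
            paths.update(leaf_paths(children, path))
        else:
            paths[name] = path
    return paths


def level_widths(paths: Dict[str, Tuple[int, ...]]) -> List[int]:
    """Largest number of siblings seen at each level; one block per level."""
    depth = max(len(p) for p in paths.values())
    widths = [0] * depth
    for p in paths.values():
        for level, idx in enumerate(p):
            widths[level] = max(widths[level], idx + 1)
    return widths


def encode(path: Tuple[int, ...], widths: List[int]) -> List[int]:
    """Concatenate one one-hot block per level; levels below a shallow leaf stay zero."""
    code: List[int] = []
    for level, width in enumerate(widths):
        block = [0] * width
        if level < len(path):
            block[path[level]] = 1
        code.extend(block)
    return code


paths = leaf_paths(TREE)
widths = level_widths(paths)
chair_code = encode(paths["chair"], widths)
wall_code = encode(paths["wall"], widths)
```

The toy tree is too small to show any saving, but the arithmetic behind the storage claim is straightforward: for a balanced tree with branching factor 4 and depth 3, a flat one-hot code needs 4^3 = 64 entries per primitive, whereas the per-level code needs only 4 × 3 = 12. Classes that share an ancestor also share the leading blocks of their codes, which is what lets coarse and fine labels be read from the same vector.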